Universal Manipulation Interface

In-The-Wild Robot Teaching Without In-The-Wild Robots

1x

1x

1x

1x

We present Universal Manipulation Interface (UMI) -- a data collection and policy learning framework that allows direct skill transfer from in-the-wild human demonstrations to deployable robot policies. UMI employs hand-held grippers coupled with careful interface design to enable portable, low-cost, and information-rich data collection for challenging bimanual and dynamic manipulation demonstrations. To facilitate deployable policy learning, UMI incorporates a carefully designed policy interface with inference-time latency matching and a relative-trajectory action representation. The resulting learned policies are hardware-agnostic and deployable across multiple robot platforms. Equipped with these features, UMI framework unlocks new robot manipulation capabilities, allowing zero-shot generalizable dynamic, bimanual, precise, and long-horizon behaviors, by only changing the training data for each task. We demonstrate UMI’s versatility and efficacy with comprehensive real-world experiments, where policies learned via UMI zero-shot generalize to novel environments and objects when trained on diverse human demonstrations.

Paper

Latest version: arXiv or here.

Robotics: Science and Systems (RSS) 2024

★ Best Systems Paper Award Finalist, RSS ★

Code and Tutorial

Team

1 Stanford University 2 Columbia University 3 Toyota Research Institute * Indicates equal contribution

BibTeX

@inproceedings{chi2024universal,

title={Universal Manipulation Interface: In-The-Wild Robot Teaching Without In-The-Wild Robots},

author={Chi, Cheng and Xu, Zhenjia and Pan, Chuer and Cousineau, Eric and Burchfiel, Benjamin and Feng, Siyuan and Tedrake, Russ and Song, Shuran},

booktitle={Proceedings of Robotics: Science and Systems (RSS)},

year={2024}

}Hardware Design

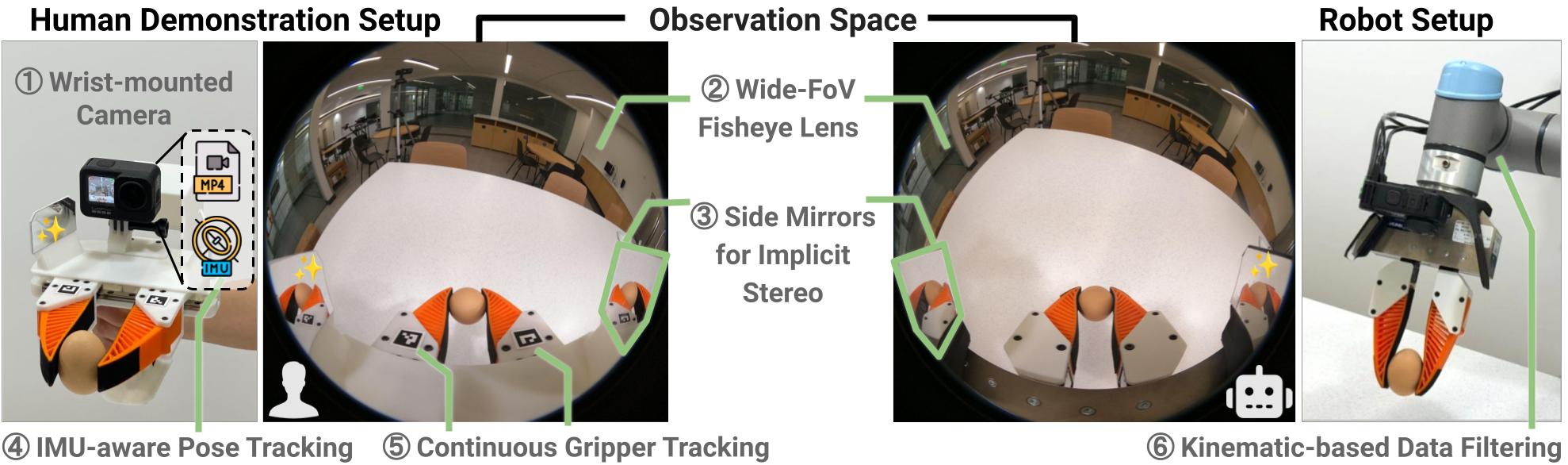

How can we capture sufficient information for a wide variety of tasks with just a wrist-mounted camera?UMI’s data collection hardware takes the form of a hand-held parallel jaw gripper, mounted with a GoPro camera ①.

To gather policy-deployable observations, UMI needs to capture sufficient visual context to infer action ② and critical information like depth ③.

To obtain action data leading to deployable policies, UMI needs to capture precise robot action under fast human motion ④, fine adjustments on griping width ⑤, and automatically check whether each demonstration is valid under the specific robot kinematic constraints ⑥.

Policy Robustness

Enabled by our unique wrist-only camera setup and camera-centric action representations, UMI is 100% calibration-free (functioning even with base movement) and robust against distractors and drastic lighting changes.

Robot Base Movements

Different Lighting Conditions

Perturbations with Other Sauce

Capability Experiments

(1) Dynamic Tossing 🤾

Task The robot is tasked to sort 6 objects by tossing them to the corresponding bin. The 3 spherical objects (baseball ⚾, orange 🍊, apple 🍎) should be tossed into the round bin, while the 3 Lego Duplo pieces go into the rectangular bin.

Ablation Without inference-time observation and action latency matching, the policy exhibits significantly more jittery movements, due to the misalignment between observations and action executions.

Ours

No Latency Matching

(2) Cup Arrangement ☕

Task Pick up and place an espresso cup on the saucer with its handle facing to the left of the robot.

Ablation Data collected by UMI is robot agnostic. Here we can deploy the same policy on both UR5e and Franka robots. In fact, you can deploy it on any robot equipped with a parallel jaw stroke > 85mm.

Ours (UR5)

Ours (Franka)

(3) Bimanual Cloth Folding 👚

Task Two robot arms need to coordinate and fold the sweater’s sleeves inward, then fold up the bottom hem, rotate 90 degrees, and finally fold the sweater in half again.

Ablation Without inter-gripper proprioception (relative pose between the two grippers), the coordination between the two arms is significantly worse.

Ours

No Inter-gripper Proprioception

(4) Dish Washing 🍽

Task For successful dish washing, the robots need to sequentially execute 7 dependent actions: turn on faucet, grasp plate, pick up sponge, wash and wipe plate until ketchups are removed, place plate, place the sponge and turn off faucet.

Ablation The baseline policy trained with ResNet-34 as vision encoder, yielded non-reactive behaviors towards variations in plate or sponge position.

Ours (CLIP-pretrained ViT as Vision Encoder)

ResNet as Vision Encoder

In-the-wild Generalization Experiments

With UMI, you can go to any home, any restaurant and start data collection within 2 minutes.

Human Data Collection

Data Overview

With a diverse in-the-wild cup manipulation dataset, UMI enabled us to train a diffusion policy that generalizes to extremely out-of-distribution objects and environments, even including serving expresso cup on top of a water fountain!

Data Collection Methods

Thanks to its portablity and intuitive design, UMI allows for ⚡ fast data collection at a rate of 30 seconds/demonstration.

Comparison with Other Methods

For the cup arrangement task, UMI gripper is over 3× faster than teleportation, reaching 48% of human hand speed.

111/h

UMI Gripper

231/h

Human Hand

35/h

Teleoperation via Space Mouse

Human Demonstration on Other Tasks Using UMI Gripper

Dynamic Tossing

Cloth Folding

Dish Washing

Acknowledgements

111/h

231/h

35/h

This work was supported in part by the Toyota Research Institute, NSF Award #2037101, and #2132519. We want to thank Google and TRI for the UR5 robots, and IRIS and IPRL lab for the Franka robot hardware. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies, either expressed or implied, of the sponsors.

We would like to thank Andy Zeng, Pete Florance, Huy Ha, Yihuai Gao, Samir Gadre, Mandi Zhao, Mengda Xu, Alper Canberk, Kevin Zakka, Zeyi Liu, Dominik Bauer, Tony Zhao, Zipeng Fu and Lucy Shi for their thoughtful discussions. We thank Alex Alspach, Brandan Hathaway, Aimee Goncalves, Phoebe Horgan, and Jarod Wilson for their help on hardware design and prototyping. We thank Naveen Kuppuswamy, Dale McConachie, and Calder Phillips-Graffine for their help on low-level controllers. We thank John Lenard, Frank Michel, Charles Richter, and Xiang Li for their advice on SLAM. We thank Eric Dusel, Nwabisi C., and Letica Priebe Rocha for their help on the MoCap dataset collection. We thank Chen Wang, Zhou Xian, Moo Jin Kim, and Marion Lepert for their assistance with the Franka setup. We especially thank Steffen Urban for his open-source projects on GoPro SLAM and Camera-IMU calibration, and John @ 3D printing world for inspiration of the gripper mechanism.

Contact

If you have any questions, please feel free to contact Cheng Chi and Zhenjia Xu.